GerminationX Companion

Part of the germinationx game design

Lirec background

Through the Lirec project we have access to a state-of-the-art AI system based on FAtiMA, a multi-agent system originally developed for the FearNot! game.

This system can simulate multiple characters, each programmed with different emotional traits and specific attitudes to other agents or objects. The agents are given goals and actions with which to achieve them. Objects, other agents and the results of actions are appraised with regard to the agent's goals, and the agent's emotional state is modified accordingly. In robotic/artificial companion research the term “affective” is preferred to “emotional” because it is less ambiguous and more precisely defined.

The existing Lirec research examines one-to-one relationships between a human and an artificial companion, or many-to-one relationships (such as the “spirit of the building” scenario). This game gives us an opportunity to examine relationships between many more people, with the accessibility of an online game.

Lirec AgentMind details

The AgentMind consists of multiple Java processes: a server providing the world and its contents, and a client for each companion. The player is represented as another agent. All agents can perceive the other agents and their actions, as well as some external symbolic signals (representing, for example, facial expression). In recent versions the agents form a “theory of mind” of the other agents, including the player's agent: an approximation of the other's internal state based on what they perceive.

In terms of scalability, ~10 agents can be run on a normal machine at this time. Each would be able to interact with multiple players at the same time, and with each other. We would need to be able to limit the number of users interacting with each agent at any one time.
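The limit on simultaneous users could be sketched as a simple admission check. This is only an illustration: `AgentSession`, `try_join`, `leave` and the cap of 2 are hypothetical names and values, not part of the AgentMind, and a real limit would have to be measured.

```python
class AgentSession:
    """Tracks which players are currently interacting with one agent."""

    def __init__(self, name, max_users):
        self.name = name
        self.max_users = max_users  # assumed cap, chosen only for the example
        self.active = set()

    def try_join(self, player_id):
        """Admit the player if there is room; return True on success."""
        if player_id in self.active:
            return True  # already interacting, nothing to do
        if len(self.active) >= self.max_users:
            return False  # agent is full, caller must wait or go elsewhere
        self.active.add(player_id)
        return True

    def leave(self, player_id):
        """End the interaction and free the slot."""
        self.active.discard(player_id)


helper = AgentSession("helper", max_users=2)
results = [helper.try_join(p) for p in ("ann", "bob", "cat")]
helper.leave("ann")
retry = helper.try_join("cat")  # a slot is free again
```

The same check would cover the single agent scenario, where access is granted only if the number of existing interactions is low enough.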

Possible scenarios

Single agent

The simplest scenario is a single agent dispensing information and helping the player. This agent would be located in a single place in the game, which players would have to travel to or request access to. They would gain access if the number of existing interactions was low enough. Access to the helper/companion could also be treated as a reward for completing some action.

The agent would be affected by the interactions with the players, and the state of the world. This could be used to aid the gameplay. For instance, perhaps extreme weather conditions would cause many players to ask for help - the agent would switch to a high level of activity to cope with all their requests, after which it would need rest - and become irritable if disturbed.
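As a rough illustration of the busy, resting and irritable cycle described above, here is a hand-written state machine. In the Lirec system this behaviour would presumably emerge from the agent's appraisal of events rather than from explicit states, so the class `HelperMood`, its thresholds and its state names are all assumptions made for the sketch.

```python
class HelperMood:
    """Toy model: calm -> busy -> resting (-> irritable if disturbed) -> calm."""

    def __init__(self, busy_threshold=5, rest_ticks=3):
        self.busy_threshold = busy_threshold  # requests per tick that count as a rush
        self.rest_ticks = rest_ticks          # ticks of rest needed after a rush
        self.state = "calm"
        self.rest_left = 0

    def tick(self, requests):
        """Advance one game tick given the number of help requests received."""
        if self.state in ("resting", "irritable"):
            if requests > 0:
                self.state = "irritable"  # disturbed while resting
            self.rest_left -= 1
            if self.rest_left <= 0:
                self.state = "calm"       # rest period is over
        elif self.state == "busy":
            if requests < self.busy_threshold:
                self.state = "resting"    # the rush has passed
                self.rest_left = self.rest_ticks
        elif requests >= self.busy_threshold:
            self.state = "busy"           # e.g. extreme weather causes a rush
        return self.state


mood = HelperMood()
history = [mood.tick(n) for n in (1, 8, 9, 2, 0, 1, 0)]
```

With these example numbers the agent stays busy through the rush, rests for three ticks, and turns irritable when a request arrives during the rest.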

It could recognise players who are being particularly needy, and start to ignore them after some time, and conversely recognise players who were doing well to reward them somehow. Note - need to look into the STM/LTM storage constraints, perhaps some higher level symbolic information on players would be required.
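One possible form for the higher level symbolic information on players is a pair of counters per player, which keeps storage small regardless of how many interactions take place. The names `PlayerNotes`, `needy_after` and `reward_after`, the thresholds, and the rule that doing well outweighs neediness are all hypothetical choices for this sketch.

```python
from collections import Counter


class PlayerNotes:
    """Compact per-player summary an agent could keep instead of full history."""

    def __init__(self, needy_after=3, reward_after=3):
        self.needy_after = needy_after    # help requests before being ignored
        self.reward_after = reward_after  # successes before being rewarded
        self.help_requests = Counter()
        self.successes = Counter()

    def record_help_request(self, player_id):
        self.help_requests[player_id] += 1

    def record_success(self, player_id):
        self.successes[player_id] += 1

    def response_to(self, player_id):
        """Return 'reward', 'ignore' or 'help' for this player."""
        if self.successes[player_id] >= self.reward_after:
            return "reward"
        if self.help_requests[player_id] >= self.needy_after:
            return "ignore"
        return "help"


notes = PlayerNotes()
for _ in range(3):
    notes.record_help_request("ann")
    notes.record_success("bob")
responses = [notes.response_to(p) for p in ("ann", "bob", "cat")]
```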

Much of this type of interaction has been researched by INESC-ID in their iCat scenarios. Particularly interesting is where the companion is passively watching two people playing a game and is biased to one player, only congratulating them and displaying resentment when the other is winning.

Multiple agents

It may be possible to experiment with multiple agents. It seems like this could lead to some interesting experiences. It also makes sense in terms of scalability, allowing more people to engage in interactions at one time by spreading the load across separate machines (as each agent is running in a separate process).

Each agent could be given a different personality. Relationships could be designed between them, and the players would take part in a more dynamic situation. For example, some agents would be helpful, others less so, and possibly some not at all. The player would choose to “summon” or converse with a particular agent. If that agent were already engaged with the maximum number of users, the player would be automatically allocated a less popular one. Agents would also be programmed with a pre-existing like or dislike of each other - and so would contradict each other, or show dislike towards objects (and perhaps opinions) belonging to other agents.
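The fallback allocation could look something like the following sketch. It reads “less popular” as “currently has the fewest users”, which is an assumption; the function `allocate`, the agent names and the cap are likewise hypothetical.

```python
def allocate(requested, load, max_users):
    """Assign a player to an agent; return the agent's name, or None if all are full.

    `load` maps agent name -> current number of users and is updated in place.
    """
    if load[requested] < max_users:
        chosen = requested
    else:
        free = [name for name, n in load.items() if n < max_users]
        if not free:
            return None
        # Least-loaded agent first; ties are broken alphabetically.
        chosen = min(free, key=lambda name: (load[name], name))
    load[chosen] += 1
    return chosen


load = {"helpful": 2, "grumpy": 0, "aloof": 1}
first = allocate("helpful", load, max_users=2)   # "helpful" is full
second = allocate("helpful", load, max_users=2)  # still full, tie between the others
```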

Fitting this into the game design

In order to make sense in the context of the game design, the companion or companions need to fit the concepts of permaculture, and the underlying themes of the game. This is essential if it is to make sense to the player.

One possibility is to use the concept of companion planting as a framework to build a set of helper characters. Each character would represent a species of plant, and its relationships with the others would follow the rules of companion planting - where some plants are advantageous to plant together and others are not. As this is one of the key themes we wish to deal with in the game, making it present here creates a clearer message and explores it from an interesting angle. For instance, each character could dispense information on its family of plants, and have a little knowledge about the plants that affect it in the system of companion planting. In terms of interaction, these characters could resemble the popular conception of the Greek gods: they could be summoned to help or hinder you, yet would have their own relationships and complexities that you become entangled with.

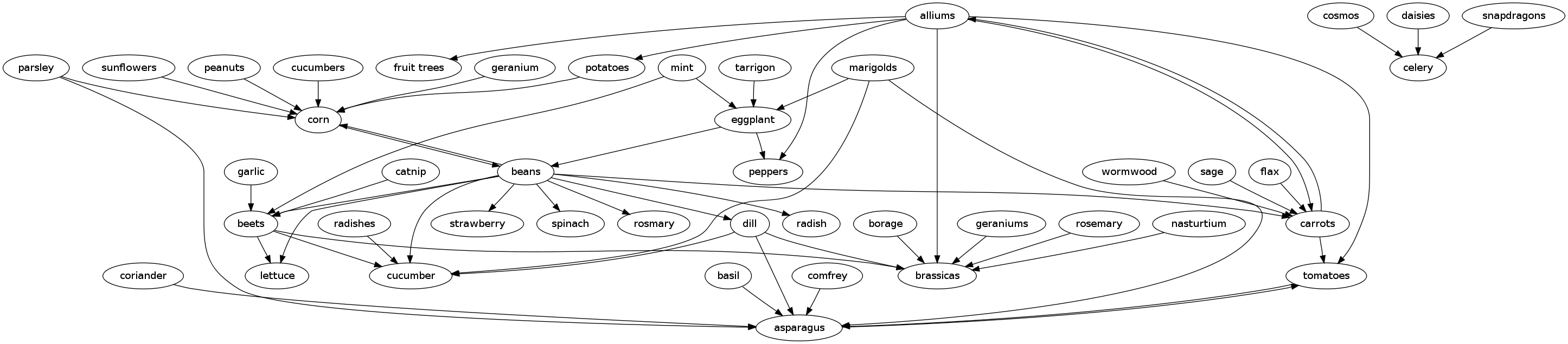

A diagram of the relationships between plants in companion planting, taken from information here: http://en.wikipedia.org/wiki/Companion_planting, where the arrows represent the relationship “is helpful to”.
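A directed graph like this could be turned into character attitudes fairly directly. The sketch below is a minimal example under stated assumptions: the plant pairs are placeholder data for illustration only, not a horticultural reference or the contents of the diagram, and `HELPS`, `HINDERS`, `attitude` and `knows_about` are hypothetical names.

```python
# Placeholder edges (a, b) meaning "a is helpful to b" / "a hinders b".
# Illustrative only; real data would come from the companion planting tables.
HELPS = {("bean", "maize"), ("maize", "bean"), ("squash", "maize")}
HINDERS = {("garlic", "bean")}


def attitude(subject, other):
    """How the `subject` character feels about `other`, from how `other` affects it."""
    if (other, subject) in HINDERS:
        return "dislike"
    if (other, subject) in HELPS:
        return "like"
    return "neutral"


def knows_about(plant):
    """Plants a character has 'a little knowledge' of: those that affect it."""
    return sorted({a for a, b in HELPS | HINDERS if b == plant})


maize_on_bean = attitude("maize", "bean")
bean_on_garlic = attitude("bean", "garlic")
squash_on_maize = attitude("squash", "maize")
bean_knowledge = knows_about("bean")
```

Because the edges are directed, attitudes need not be mutual: in this placeholder data squash helps maize, so maize likes squash while squash is neutral towards maize.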

See also companions in games for more ideas.